API Load Testing: A Key to Enhancing System Performance

This article explains what API load testing is, why it matters, which metrics to watch, and how to run a load test with EchoAPI.

What is Load Testing?

Load testing evaluates an API's performance under normal and peak load conditions to determine its capacity. The objective is to find the maximum number of requests the API can handle while still meeting its performance targets.
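A common way to find that maximum is to raise the load in steps and stop at the first step that breaks a performance target. The sketch below assumes you already have per-step measurements. The `StepResult` structure and the two thresholds (a p95 limit and an error-rate limit) are hypothetical choices for illustration, not values from any particular tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StepResult:
    """Measurements from one load level (hypothetical record format)."""
    concurrency: int        # concurrent users at this step
    p95_ms: float           # 95th-percentile response time, in milliseconds
    error_rate_pct: float   # failed requests as a percentage of all requests


def max_sustainable_concurrency(steps, p95_limit_ms, error_limit_pct) -> Optional[int]:
    """Return the highest concurrency reached before any threshold was violated.

    Steps are checked in ascending order of concurrency; the first step that
    exceeds either limit ends the search. Returns None if the lowest step fails.
    """
    best = None
    for step in sorted(steps, key=lambda s: s.concurrency):
        if step.p95_ms > p95_limit_ms or step.error_rate_pct > error_limit_pct:
            break  # first failing level: stop climbing
        best = step.concurrency
    return best
```

Stopping at the first failing step, rather than picking the best passing step anywhere in the list, avoids reporting a capacity above a load level that has already failed.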

Common load testing tools include Apache JMeter, Gatling, BlazeMeter, and EchoAPI.

Why Do We Conduct Load Testing?

Load testing is critical for assessing API performance under various conditions. It helps ensure a smooth user experience, supports capacity planning, exposes bottlenecks, and validates updates:

- Performance Evaluation: Understand the API’s performance under various loads and verify whether it meets business requirements.

- Capacity Planning: Analyze system load to assist with infrastructure scaling when necessary.

- Bottleneck Identification: Discover potential performance bottlenecks for future optimization.

- User Experience Assurance: Ensure that users consistently receive a smooth experience when using the API, enhancing customer satisfaction.

- Regression Testing: Validate that code updates do not negatively impact existing performance.
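For regression testing, one simple approach is to compare each new run against a stored baseline and flag it when latency or errors exceed an agreed tolerance. This is a minimal sketch: the dictionary keys and the default tolerances (10% growth in p95 latency, 1% error rate) are assumptions, not recommended values.

```python
def check_regression(baseline, current, max_p95_increase_pct=10.0, max_error_rate_pct=1.0):
    """Compare a new run with a baseline run and return a list of problems.

    `baseline` and `current` are dicts with hypothetical keys "p95_ms" and
    "error_rate_pct". An empty list means no regression was detected.
    """
    problems = []
    # Latency may grow by at most max_p95_increase_pct percent over the baseline.
    allowed_p95 = baseline["p95_ms"] * (1 + max_p95_increase_pct / 100)
    if current["p95_ms"] > allowed_p95:
        problems.append(f"p95 of {current['p95_ms']:.0f} ms exceeds the allowed {allowed_p95:.0f} ms")
    # The error rate is checked against an absolute ceiling.
    if current["error_rate_pct"] > max_error_rate_pct:
        problems.append(f"error rate of {current['error_rate_pct']:.1f}% exceeds {max_error_rate_pct:.1f}%")
    return problems
```

In a CI pipeline, a non-empty list could be used to fail the build after a code change.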

Key Metrics to Monitor During Load Testing

When conducting load tests, it’s important to monitor several key metrics:

- Response Time: The time the API takes to respond to a request, including Time to First Byte (TTFB) and total response time.

- Throughput: The number of requests handled per unit of time, typically measured in QPS (queries per second) or RPS (requests per second).

- Error Rate: The proportion of error responses from the API under high load, reflecting system stability.

- Resource Utilization: Monitor CPU, memory, disk I/O, and network bandwidth usage to identify performance issues due to resource constraints.

- Concurrent Users: The number of users that can access the API simultaneously, helping assess system scalability.
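Throughput, error rate, and average response time can all be derived from a log of completed requests. The sketch below assumes a hypothetical `Sample` record per request plus the wall-clock duration of the whole run; it is one straightforward way to do the arithmetic, not how any specific tool computes it.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One completed request (hypothetical record format)."""
    latency_s: float  # total response time, in seconds
    ok: bool          # True if the request counted as successful


def summarize(samples, wall_time_s):
    """Return throughput, error rate, and average response time for one run."""
    if not samples or wall_time_s <= 0:
        raise ValueError("need at least one sample and a positive duration")
    total = len(samples)
    failed = sum(1 for s in samples if not s.ok)
    return {
        "throughput_rps": total / wall_time_s,   # requests per second of wall-clock time
        "error_rate_pct": failed / total * 100,  # share of failed requests
        "avg_response_s": sum(s.latency_s for s in samples) / total,
    }
```

Throughput divides by wall-clock time rather than by the sum of latencies, because under concurrent load many requests overlap in time.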

Quickly Validate API Performance in the Development Phase with EchoAPI

EchoAPI is a comprehensive API development tool that covers API Debugging, API Design, Load Testing, Documentation, and Mock Servers. This allows for both interface debugging and load testing within a single tool, eliminating the need to switch between applications.

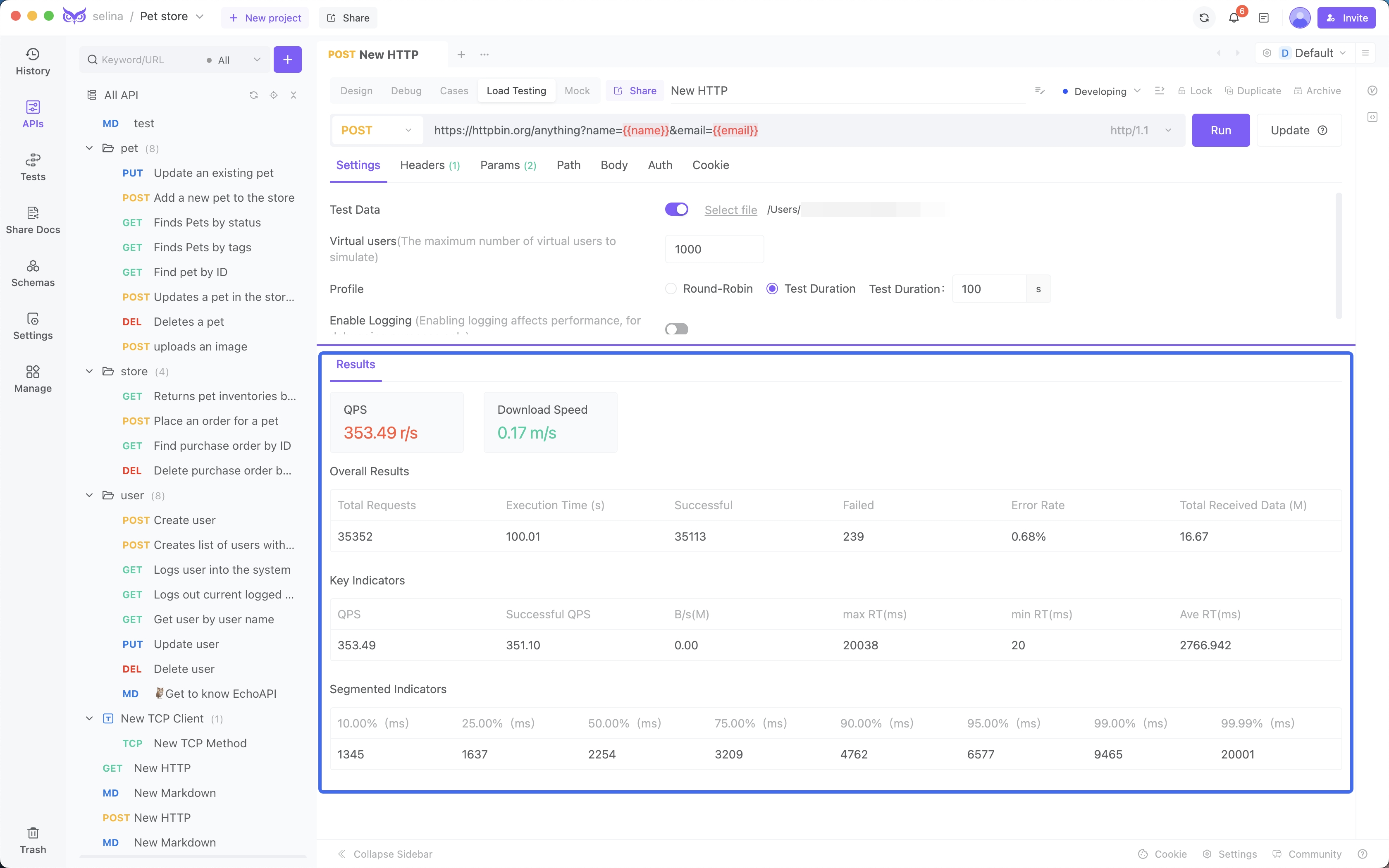

Example: Conducting Load Testing with 1000 Concurrent Users

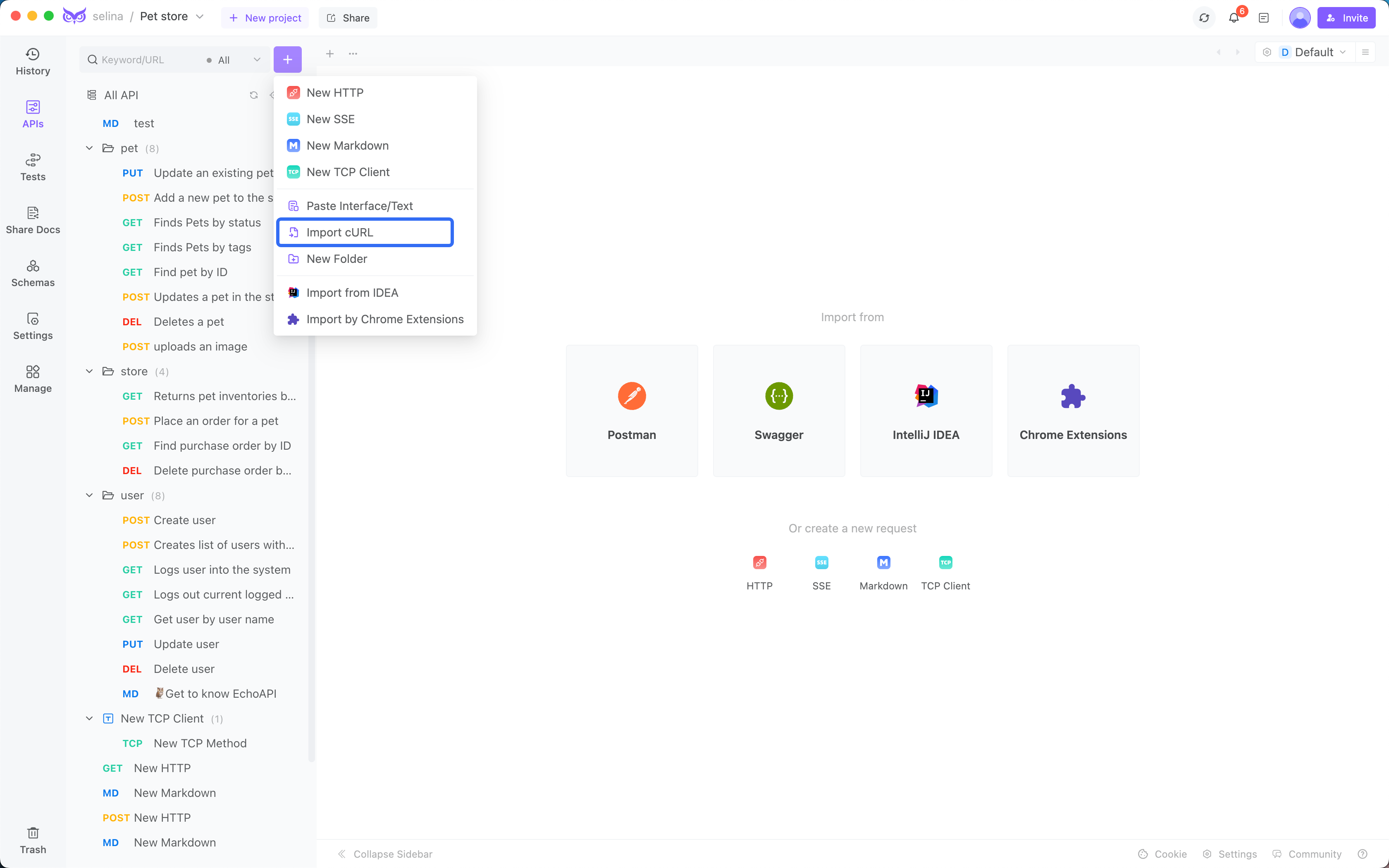

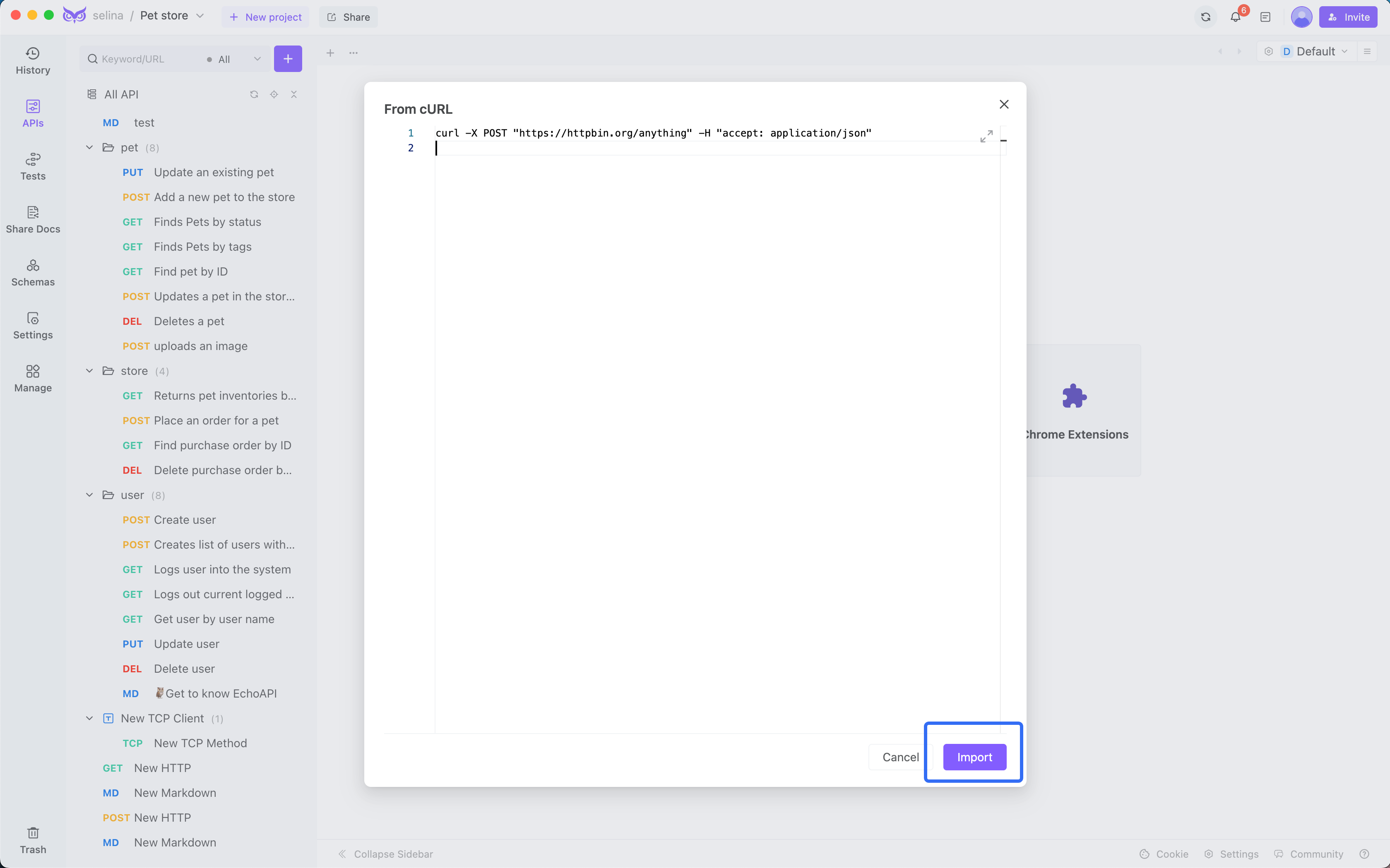

1. Create an Interface: Import the request from a curl command:

```shell
curl -X POST "https://httpbin.org/anything" -H "accept: application/json"
```

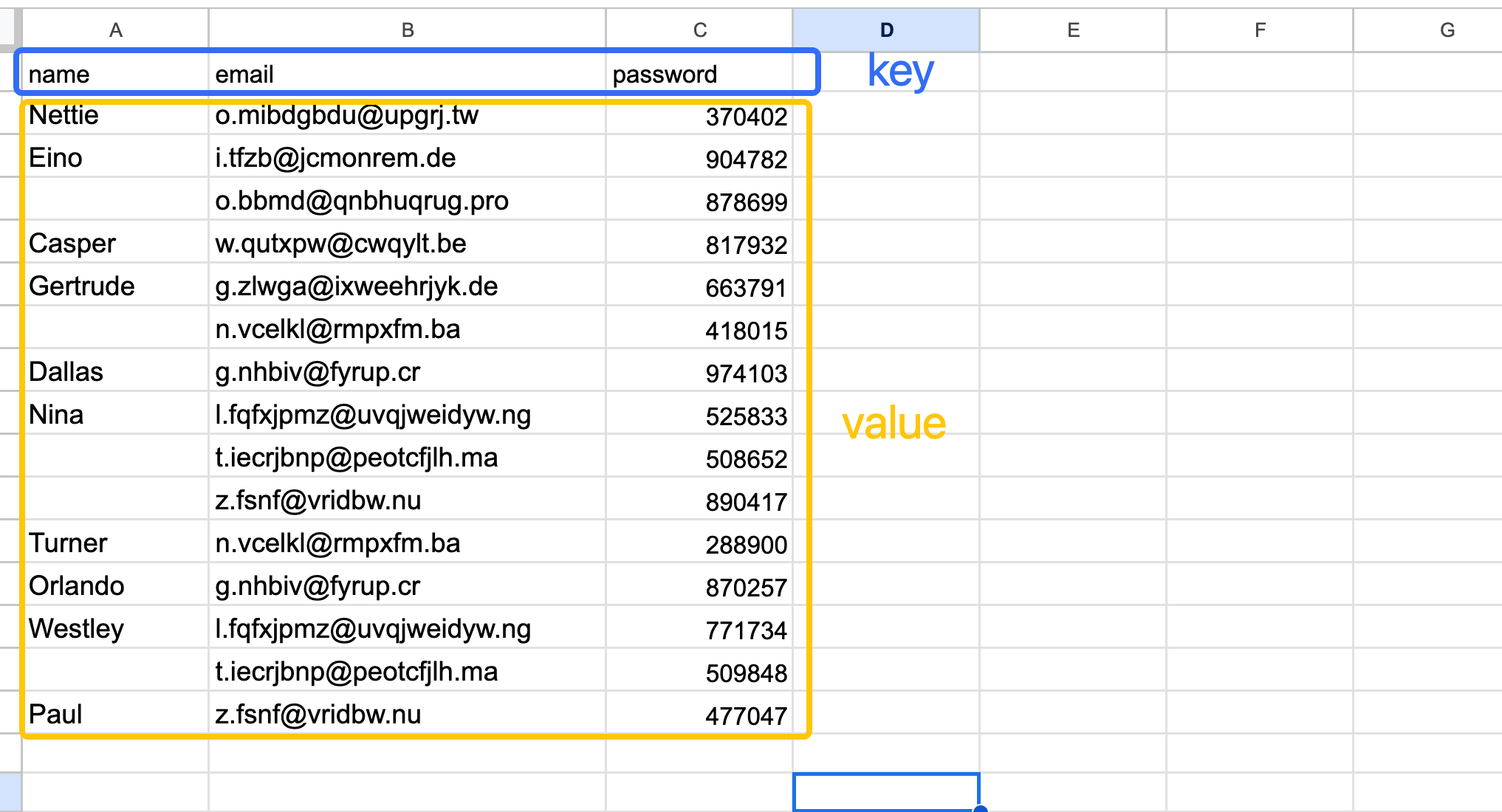
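If you want to reproduce the same request outside EchoAPI, for example to sanity-check the endpoint before a test, Python's standard `urllib.request` module can build the equivalent of the curl command. This is only an illustration; like `curl -X POST` without `-d`, it sends no request body.

```python
import urllib.request

URL = "https://httpbin.org/anything"


def build_request():
    """Build the equivalent of the curl command: a POST with an Accept header and no body."""
    return urllib.request.Request(
        URL,
        method="POST",
        headers={"accept": "application/json"},
    )
```

Sending it is a separate step, e.g. `urllib.request.urlopen(build_request(), timeout=10)`, which requires network access to httpbin.org.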

2. Prepare Test Data: Prepare the test data in the interface, as shown below.

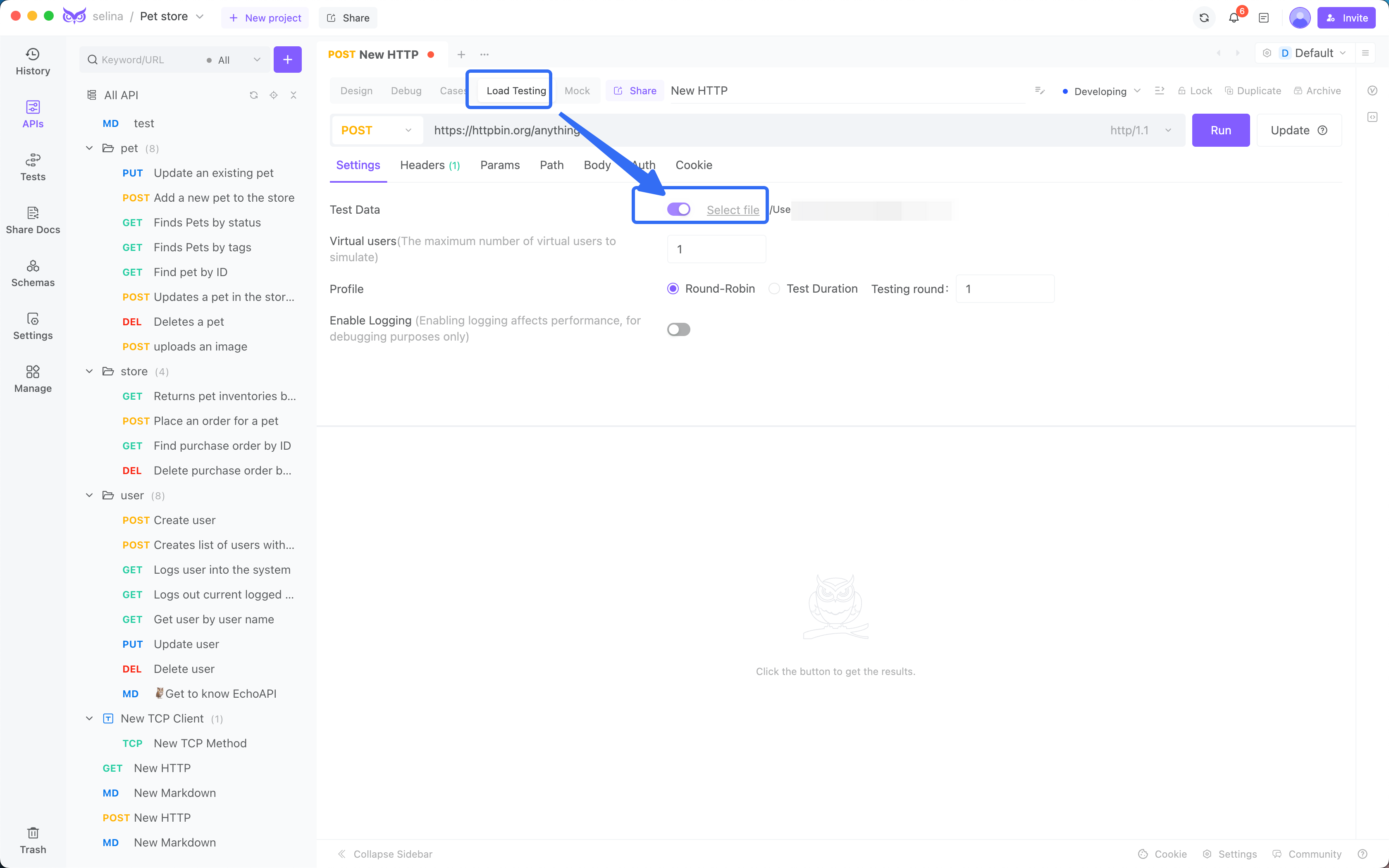
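What the test data looks like depends on your endpoint. As a hypothetical example, assuming the tool accepts a CSV file with a header row (check the import options in the interface), the following generates one row per virtual user for a 1000-user test. The `username` and `email` columns are placeholders, not fields the endpoint requires.

```python
import csv
import io


def make_test_data(rows):
    """Return CSV text with a header row followed by `rows` data rows.

    The column names (username, email) are hypothetical placeholders.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["username", "email"])
    for i in range(1, rows + 1):
        writer.writerow([f"user{i}", f"user{i}@example.com"])
    return buffer.getvalue()
```

To save it for upload, write the returned text to a file opened with `open("test_data.csv", "w", newline="")`.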

3. Upload Test Data: Upload the prepared test data.

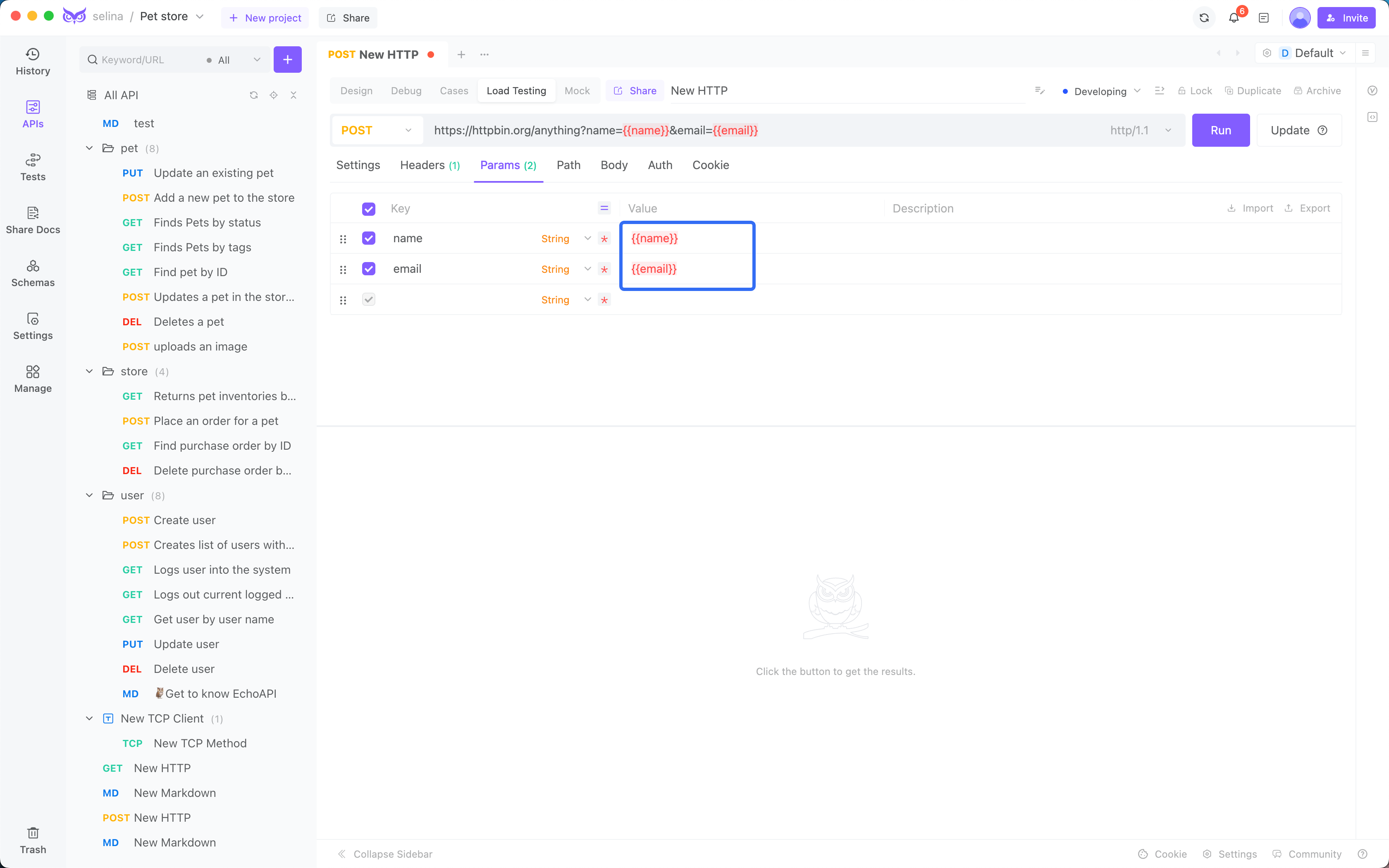

4. Reference Test Data in Parameters: Use the {{}} syntax to reference test data in request parameters.

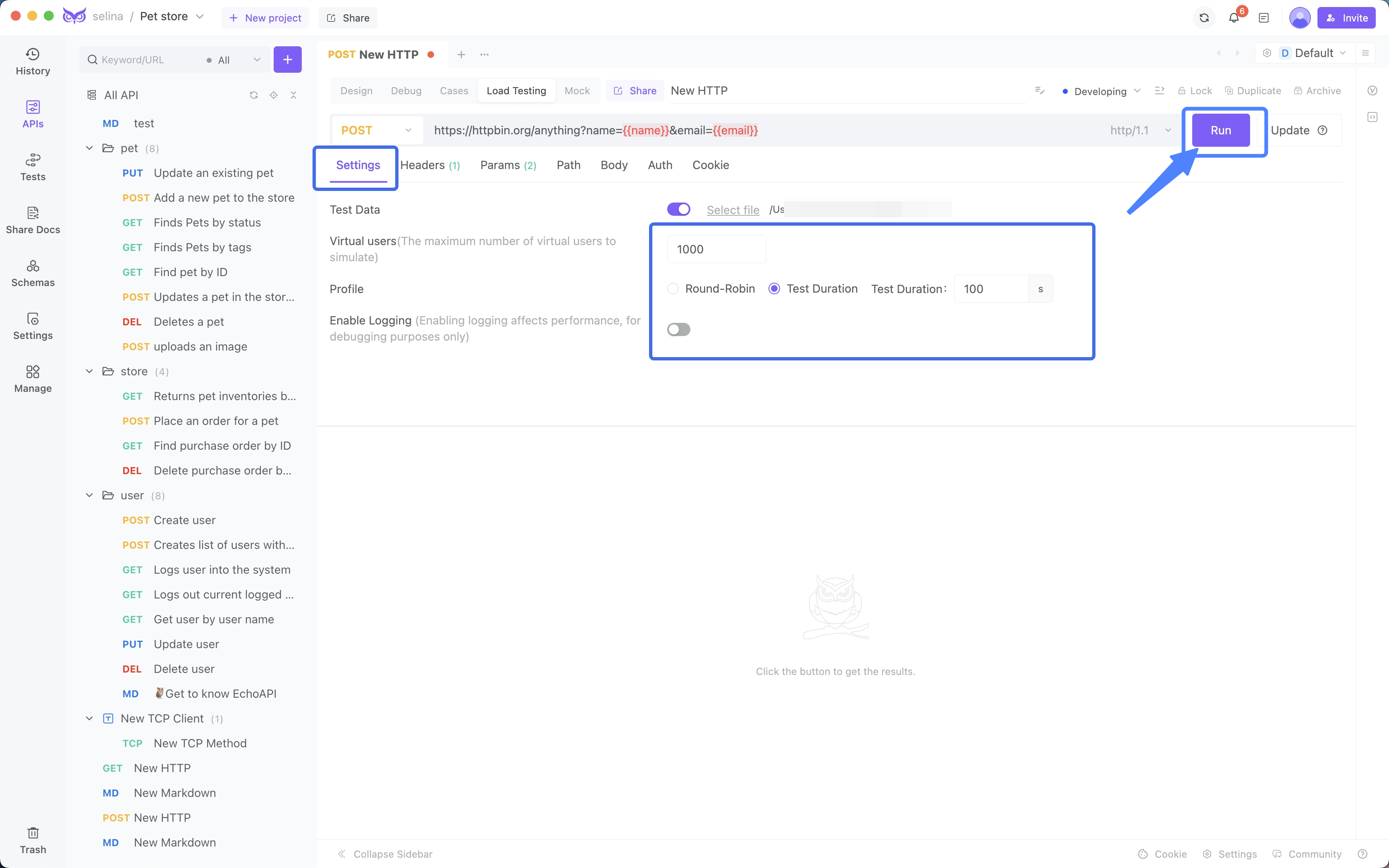

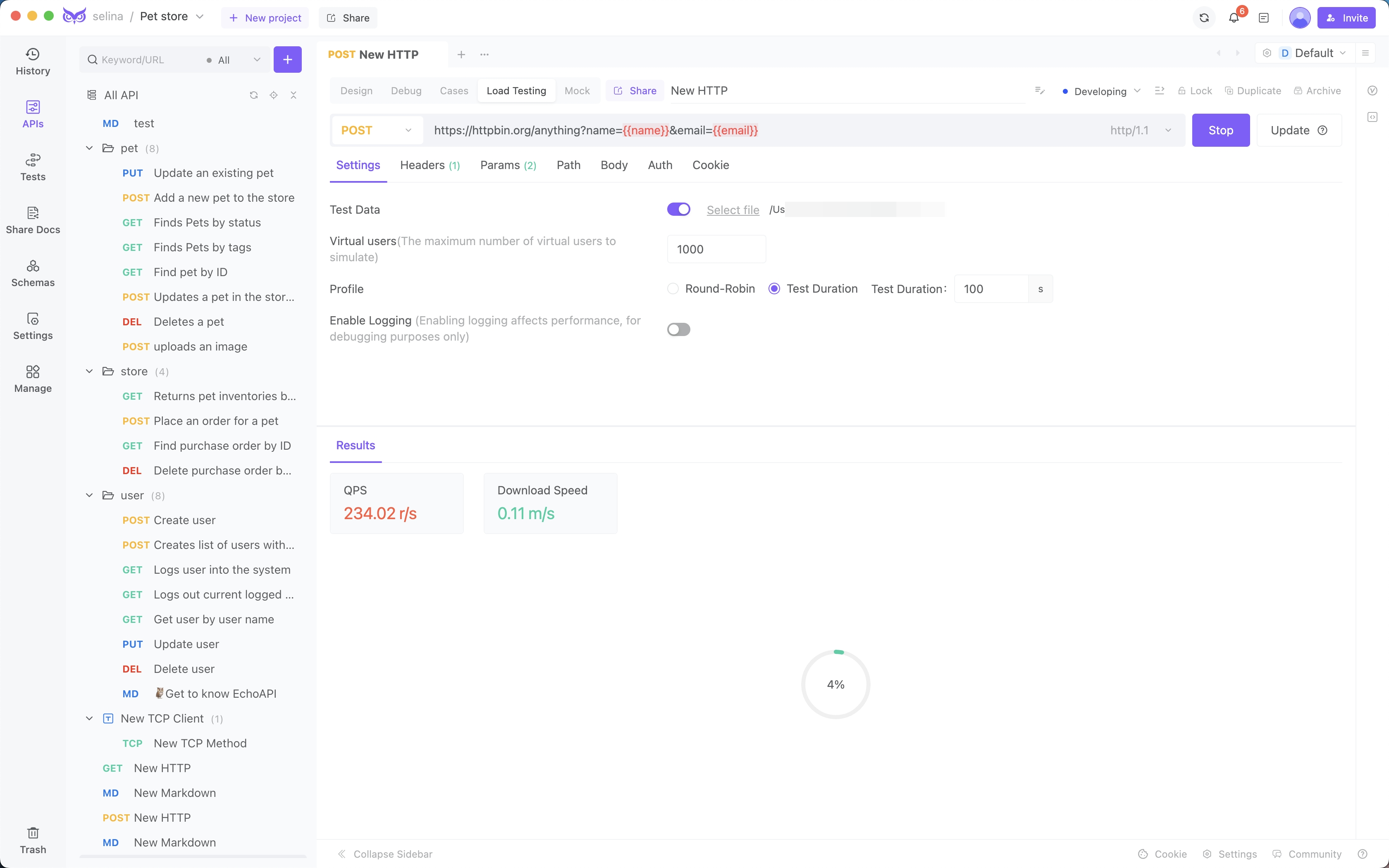
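Conceptually, `{{}}` references are placeholders that are filled from a row of test data before a request is sent. The sketch below models that with a regular expression. It assumes one data row per request and leaves unknown names untouched, which may differ from EchoAPI's actual behavior.

```python
import re

# Matches {{name}}, tolerating spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template, row):
    """Replace each {{name}} in `template` with row[name]; unknown names stay as-is."""
    def substitute(match):
        key = match.group(1)
        return str(row[key]) if key in row else match.group(0)
    return PLACEHOLDER.sub(substitute, template)
```

Combined with `csv.DictReader`, each row of a data file would yield one rendered request body.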

5. Configure the Load Test and Review Results: Set the load parameters (here, 1000 concurrent users), start the test, and review the results to assess the performance metrics.
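A load test with N concurrent users and R rounds sends N * R requests, which matches the Total Requests formula in the results table. The sketch below approximates that model with a thread pool: each thread plays one virtual user and sends its requests one after another, recording latency and status. `send` is a hypothetical stand-in for whatever issues the real request, and a status of `None` marks a network-level failure. Python threads are convenient for illustration, but this is not how dedicated tools generate a 1000-user load efficiently.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_load_test(send, users, rounds):
    """Run `users` concurrent virtual users, each sending `rounds` requests in sequence.

    `send` is a zero-argument callable that performs one request and returns an
    HTTP status code. Returns (results, wall_time_s), where results is a list of
    (latency_s, status) tuples with users * rounds entries.
    """
    def virtual_user():
        records = []
        for _ in range(rounds):
            start = time.perf_counter()
            try:
                status = send()
            except OSError:
                status = None  # network-level failure, e.g. connection refused or timeout
            records.append((time.perf_counter() - start, status))
        return records

    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(virtual_user) for _ in range(users)]
        results = [record for future in futures for record in future.result()]
    return results, time.perf_counter() - started
```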


How Load Testing Results Are Calculated

| Metric | Meaning | Calculation Method |
|---|---|---|
| Total Requests | Total number of requests sent | Concurrent Users * Rounds |
| Execution Time | Total time taken to complete the load test | Task End Time - Task Start Time |
| Successful Requests | Number of HTTP requests that received a 200 status code | N/A |
| Failed Requests | Number of HTTP requests that returned a non-200 status code or hit a connection error | N/A |
| Error Rate | Percentage of requests that failed during the load test | (Failed Requests / Total Requests) * 100 |
| Total Received Data | Total size of the response data received, in bytes | Sum of the byte sizes of all responses |
| Requests Per Second | Average number of requests processed per second | Total Requests / Total Time |
| Successful Requests Per Second | Average number of successful requests processed per second | Successful Requests / Successful Time |
| Bytes Received Per Second | Average amount of data received per second | Total Received Data / Total Time |
| Maximum Response Time | Longest time taken by a single request | Longest execution time among all requests |
| Minimum Response Time | Shortest time taken by a single request | Shortest execution time among all requests |
| Average Response Time | Mean time taken per request | Sum of all request execution times / Total Requests |
| 10th Percentile | Response time within which the fastest 10% of requests completed | Sort execution times in ascending order and take the value at the 10% position |
| 25th Percentile | Response time within which the fastest 25% of requests completed | Sort execution times in ascending order and take the value at the 25% position |
| 50th Percentile | Response time within which the fastest 50% of requests completed | Sort execution times in ascending order and take the value at the 50% position |
| 75th Percentile | Response time within which the fastest 75% of requests completed | Sort execution times in ascending order and take the value at the 75% position |
| 90th Percentile | Response time within which the fastest 90% of requests completed | Sort execution times in ascending order and take the value at the 90% position |
| 95th Percentile | Response time within which the fastest 95% of requests completed | Sort execution times in ascending order and take the value at the 95% position |
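The percentile rows describe the nearest-rank method: sort the response times and take the value at the p% position. A minimal sketch, assuming the position is rounded up; other tools interpolate between neighboring values, so their figures can differ slightly.

```python
import math


def percentile(values, p):
    """Nearest-rank percentile: sort ascending and take the value at the p% position."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based position, rounded up
    return ordered[max(rank, 1) - 1]          # p = 0 falls back to the minimum
```

For example, with five response times `[5, 1, 4, 2, 3]`, the 50th percentile is at position ceil(2.5) = 3 of the sorted list, which is 3.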

Conclusion

Load testing is crucial for ensuring API availability, optimizing performance, and enhancing the user experience. With a sound testing strategy, a focus on the key metrics, and the right tools, development teams can efficiently pinpoint and resolve performance bottlenecks and maintain a competitive advantage. We hope this article has offered useful insights and practical guidance for improving your API load testing practices.
