RAG vs. CAG: Key Differences and How EchoAPI AI-Driven API Testing Enhances Your Workflow

Understanding the differences between RAG and CAG is crucial for businesses leveraging AI in their applications.

When it comes to integrating generative AI models into enterprise workflows, two common paradigms have emerged: Retrieval-Augmented Generation (RAG) and Cache-Augmented Generation (CAG). Both approaches enhance the capabilities of large language models (LLMs), but they do so in fundamentally different ways. Understanding these differences is crucial for businesses looking to leverage AI for their applications, especially in API testing and development.

In this article, we'll explore the key distinctions between RAG and CAG and discuss how the EchoAPI AI-powered API testing solution can help streamline testing and improve software quality.

![RAG vs. CAG: Key Differences](https://assets.echoapi.com/upload/user/222825349921521664/log/324944e8-f2dc-4718-b6ef-7a6ea00cad20.png "image.png")

What is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) enhances the capabilities of LLMs by incorporating real-time retrieval of external data. In this model, the system fetches relevant information from an external source—such as a knowledge base, API, or database—during inference. The retrieved data is then processed by the language model to generate more accurate and contextually relevant responses.

How RAG Works:

- Retriever: The retriever searches external data sources for relevant information based on the query.

- Generator: The LLM synthesizes the retrieved data along with the query to produce a response.
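The retriever and generator steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `DOCUMENTS` list, the word-overlap scoring, and the `generate` stub are all assumptions made for the example. A real system would typically query a vector store or search index and call an actual LLM.

```python
import re
from collections import Counter

# Hypothetical in-memory "external source"; a real system would query a
# knowledge base, database, or API instead.
DOCUMENTS = [
    "The /users endpoint returns a paginated list of user accounts.",
    "Rate limits are 100 requests per minute per API key.",
    "The /orders endpoint requires an OAuth bearer token.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, documents, top_k=1):
    """Retriever: rank documents by how many query words they share."""
    query_terms = Counter(tokenize(query))
    scored = []
    for doc in documents:
        overlap = sum((query_terms & Counter(tokenize(doc))).values())
        scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, retrieved):
    """Combine the retrieved passages and the query into one prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieved)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


def generate(prompt):
    """Generator stand-in: a real system would send the prompt to an LLM."""
    return f"[LLM response conditioned on {len(prompt)} prompt characters]"


query = "What are the rate limits per API key?"
retrieved = retrieve(query, DOCUMENTS)
prompt = build_prompt(query, retrieved)
print(prompt)
print(generate(prompt))
```

Note that the retrieval step runs on every query, which is where both the latency and the document-selection risk come from.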

Strengths of RAG:

- Dynamic Knowledge Retrieval: RAG can access updated or real-time data, making it ideal for dynamic and evolving knowledge domains.

- Versatility: Works across various use cases, including customer support, content generation, and more.

Limitations of RAG:

- Retrieval Latency: Real-time data fetching introduces delays, which can be a problem in latency-sensitive applications.

- Document Selection Errors: The quality of results depends heavily on how relevant the retrieved documents are.

What is Cache-Augmented Generation (CAG)?

Cache-Augmented Generation (CAG), on the other hand, simplifies knowledge integration by preloading all relevant data into the model's context before inference. Instead of retrieving data at query time, CAG relies on a precomputed cache, so the model can generate responses without any retrieval delay.

How CAG Works:

- Preloading Knowledge: Relevant data is loaded into the model's context window before the inference process begins.

- Key-Value (KV) Cache: The model processes the preloaded data once and stores the resulting attention key-value states. Each query then reuses this cache instead of reprocessing the documents.
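The control flow of these two steps can be sketched as follows. This is a toy simulation under stated assumptions: `CachedContextModel` is a hypothetical class, and its "cache" is just a list of words standing in for the key-value tensors a real LLM would store. The point is only to show that the knowledge base is encoded once and then reused across queries.

```python
class CachedContextModel:
    """Toy stand-in for an LLM with a reusable prefix cache (hypothetical)."""

    def __init__(self):
        self.prefix_cache = None
        self.prefix_encodings = 0  # how many times the knowledge was encoded

    def _encode(self, text):
        # Stand-in for the forward pass that would produce key/value states.
        return text.split()

    def preload(self, documents):
        """Encode the whole knowledge base once, before any query arrives."""
        self.prefix_cache = self._encode("\n".join(documents))
        self.prefix_encodings += 1

    def answer(self, query):
        """Reuse the cached prefix; only the query is encoded per request."""
        if self.prefix_cache is None:
            raise RuntimeError("call preload() before answer()")
        query_tokens = self._encode(query)
        total = len(self.prefix_cache) + len(query_tokens)
        return f"[response over {total} tokens, {len(query_tokens)} newly encoded]"


model = CachedContextModel()
model.preload([
    "Rate limits are 100 requests per minute.",
    "The /orders endpoint requires a bearer token.",
])

first = model.answer("What is the rate limit?")
second = model.answer("Which endpoint needs a token?")
print(first)
print(second)
print("knowledge base encoded", model.prefix_encodings, "time(s)")
```

Because nothing is fetched at query time, there is no retrieval step to get wrong, but any change to the knowledge base means calling `preload` again.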

Strengths of CAG:

- Efficiency: No retrieval latency, making CAG ideal for applications that require fast responses.

- Accuracy and Simplicity: Because all relevant data is already in context, CAG avoids document-selection errors and removes the retrieval pipeline from the architecture.

Limitations of CAG:

- Static Knowledge Base: The knowledge base must be predefined, making CAG less suitable for domains requiring constant updates.

- Memory Constraints: The entire knowledge base must fit within the model's context window, which caps how much data can be preloaded.
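A quick way to reason about the memory constraint is to estimate whether the knowledge base fits in the context window at all. The sketch below is a rough heuristic, not an exact method: the four-characters-per-token ratio is only a rule of thumb for English text, and the context window sizes and reserved budget are hypothetical values chosen for illustration. A real check would use the target model's own tokenizer.

```python
def estimate_tokens(text, chars_per_token=4):
    """Rough token estimate; assumes about 4 characters per token."""
    return -(-len(text) // chars_per_token)  # ceiling division


def fits_in_context(documents, context_window, reserved_tokens):
    """Return (fits, needed, budget) for preloading all documents.

    reserved_tokens is the space kept free for the query and the answer.
    """
    budget = context_window - reserved_tokens
    needed = sum(estimate_tokens(doc) for doc in documents)
    return needed <= budget, needed, budget


# Hypothetical knowledge base: two documents of 4,000 and 8,000 characters.
knowledge_base = ["a" * 4000, "b" * 8000]

for window in (8192, 2048):  # assumed context window sizes, in tokens
    fits, needed, budget = fits_in_context(knowledge_base, window, 1024)
    choice = "CAG is feasible" if fits else "consider RAG instead"
    print(f"window={window}: need {needed}, budget {budget} -> {choice}")
```

When the estimate exceeds the budget, preloading everything is not an option, and retrieving only the relevant passages per query becomes the more practical route.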

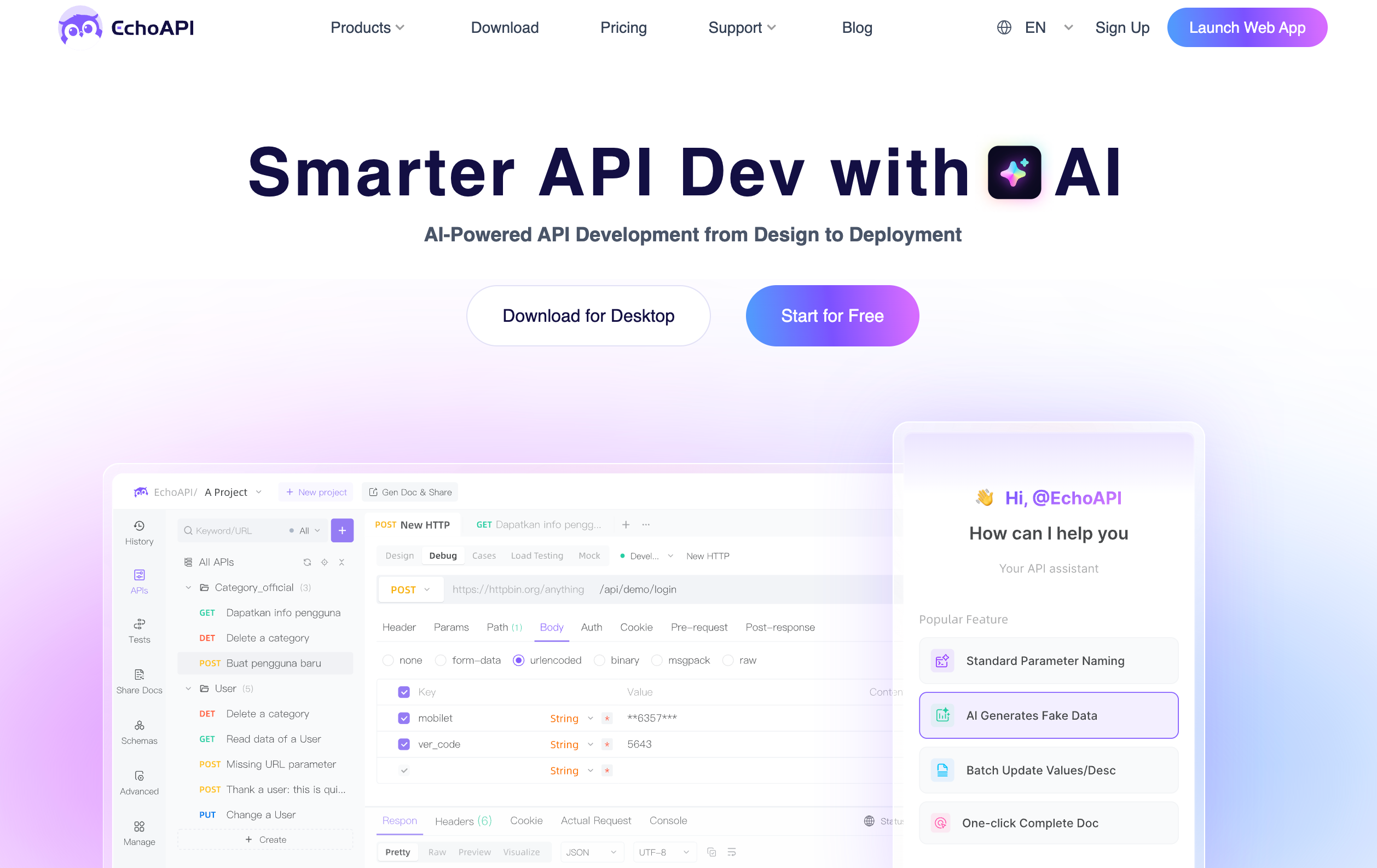

How EchoAPI AI-Powered API Testing Solution Helps

The EchoAPI AI-driven API testing platform is designed to maximize the strengths of both RAG and CAG, delivering comprehensive test suites with zero manual hassle. Here's how our product stands out:

1. Instant AI-Generated Test Suites with Flawless Coverage:

- With a simple click, EchoAPI AI generates complete test suites, covering edge cases, security, performance, and more.

2. AI Blocks Bugs Before Production, Preventing Errors:

- The EchoAPI AI-driven testing platform identifies and blocks over 61.4% of hidden bugs before they reach production. This proactive approach reduces potential issues associated with data retrieval errors, which can occur in RAG setups.

3. Smarter AI Debugging, Pinpointing Issues:

- By auto-generating reports and pinpointing root causes, the EchoAPI platform streamlines debugging, saving developers significant time. This capability works with both CAG and RAG by automating error identification in test suites.

4. AI-Driven Standard Parameter Naming, No More Guesswork:

- AI auto-generates standard and self-explanatory parameter names for your API endpoints, eliminating guesswork and ensuring consistency, which is crucial for both CAG and RAG workflows.

5. Instant, Real-World Data, AI-Generated:

- The EchoAPI platform generates production-grade test data that mimics real-world complexity, similar to the preloading process in CAG. This enables flawless API testing without needing to retrieve data on the fly.

6. AI One-Click Bulk Sync, No More Manual Hassle:

- With the ability to update hundreds of parameters at once, the EchoAPI system automates the tedious process of syncing test data, ensuring rapid test execution and consistent results.

7. AI-Optimized API Docs in Seconds, Ready for Sharing:

- EchoAPI AI optimizes and formats API documentation automatically, making it easy for developers to share API specs with stakeholders, further improving testing and collaboration.

Conclusion

The decision to use CAG or RAG depends largely on your specific use case—whether you need real-time updates or whether speed and simplicity are paramount.

CAG offers faster and more efficient testing when the data is static and predefined, while RAG provides flexibility for testing APIs that require real-time, dynamic data.

With the EchoAPI AI-powered API testing platform, you can take advantage of the best features of both paradigms, ensuring flawless coverage, faster execution, and proactive error detection.

By leveraging the power of AI-driven test suites and preloaded knowledge, we help you deliver higher-quality, bug-free APIs without the manual hassle, no matter the paradigm you choose.
