A2A vs. MCP: Understanding the Differences and When to Use Each

This article explores the distinctions between the Agent2Agent (A2A) protocol and the Model Context Protocol (MCP), both crucial in AI interactions. It guides readers on selecting the appropriate protocol based on their AI implementation needs.

As AI makes its way deeper into business workflows, getting multiple AI agents to collaborate seamlessly has become a major challenge.

To address this, Google Cloud introduced an open protocol called Agent2Agent (A2A), designed to enable AI agents across different platforms and systems to work together efficiently.

In this article, we’ll break down what A2A is, how it changes the way we interact with AI agents, and its relationship to API development. We’ll also dive into Model Context Protocol (MCP), a related concept, and clarify the differences between A2A and MCP, helping you understand when to use one over the other.

What is the A2A Protocol?

Simply put, A2A (Agent2Agent) is a standardized communication protocol that allows different AI agents to collaborate and exchange information. Think of it like how humans communicate through phones or emails, but in this case it's AI agents communicating with each other, using a common language and set of rules. This makes it possible for agents across different platforms and applications to interoperate and accomplish more complex tasks together.

A2A is not a closed system. It’s an open standard, which means it encourages support from a wide variety of technical partners and allows the creation of a diverse ecosystem. Whether you're working with natural language processing, machine learning, or other AI technologies, agents using A2A can collaborate across different tech stacks and seamlessly work together in complex enterprise workflows.

The Role of APIs in A2A

You may be wondering, what role does an API (Application Programming Interface) play in all this? Well, APIs are one of the core components of the A2A protocol. At its heart, A2A is an API-based standard. It defines how AI agents should interact via APIs, including data transfer, task management, and feedback coordination. Some key API operations in A2A include:

- Capability Discovery: Just as you can query a service to check its features, AI agents can advertise their capabilities in a standardized format, a JSON document called an "Agent Card". This lets agents discover each other’s functions and collaborate accordingly.

- Task Management: Through APIs, agents can send task requests to one another, track the progress of tasks, and handle results. For example, a "Customer Support Agent" might request a "Recommendation System Agent" to analyze a customer's purchasing history and provide personalized suggestions.

- Collaboration: Agents use APIs to send messages, share data, provide context, and even deliver completed work. This exchange is not limited to text; it can also involve audio, video, and other data formats.

- User Experience Negotiation: APIs allow agents to negotiate the final output with one another. For example, they might collaborate on choosing the best format for the user’s results (HTML, images, etc.).

All of this relies on well-defined APIs to ensure agents can “understand” each other’s capabilities and tasks, enabling smooth collaboration without interference.
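
As a rough illustration of capability discovery, the sketch below picks a collaborator by looking at the skills each agent advertises. The cards, URLs, and field names (`name`, `url`, `skills`, `tags`) are assumptions modeled loosely on public A2A examples, not a copy of the specification, and `find_agents_by_tag` is a hypothetical helper.

```python
# Hypothetical Agent Cards. Field names are assumptions for illustration;
# check the A2A specification for the authoritative schema.
AGENT_CARDS = [
    {
        "name": "Recommendation System Agent",
        "description": "Suggests products based on purchase history.",
        "url": "https://agents.example.com/recommendations",
        "skills": [
            {
                "id": "recommend",
                "name": "Personalized suggestions",
                "tags": ["recommendation", "purchase-history"],
            }
        ],
    },
    {
        "name": "Logistics Agent",
        "description": "Tracks shipments.",
        "url": "https://agents.example.com/logistics",
        "skills": [
            {"id": "track", "name": "Shipment tracking", "tags": ["shipping"]}
        ],
    },
]


def find_agents_by_tag(cards, tag):
    """Return the names of agents that advertise at least one skill with `tag`."""
    return [
        card["name"]
        for card in cards
        if any(tag in skill.get("tags", []) for skill in card.get("skills", []))
    ]


if __name__ == "__main__":
    # A Customer Support Agent looking for someone who can recommend products.
    print(find_agents_by_tag(AGENT_CARDS, "recommendation"))
```

In a real deployment the cards would be fetched over HTTP from each agent rather than hard-coded, but the matching step would look much the same.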

A2A Protocol Design Principles

When designing A2A, Google and its partners kept a few core principles in mind:

- Seamless Collaboration: A2A supports collaboration across a wide range of AI technologies and architectures, from text-based communication to multimedia support like audio and video.

- Security: A2A implements enterprise-grade authentication and authorization mechanisms, ensuring that data exchange remains secure and confidential.

- Support for Long-Running Tasks: A2A is designed to handle everything from quick tasks to deep research that may take hours or even days, especially when humans are in the loop. Throughout the process, the protocol can provide real-time feedback, notifications, and state updates.

- Compatibility: A2A builds on existing, widely used standards such as HTTP, Server-Sent Events (SSE), and JSON-RPC, so it integrates smoothly into existing IT ecosystems and reduces integration complexity for businesses.
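
To make the JSON-RPC and long-running-task ideas more concrete, here is a minimal sketch of a request envelope plus a small tracker for task status updates. The JSON-RPC 2.0 envelope is standard; the method name `tasks/send`, the parameter shape, and the state names and transitions are assumptions loosely modeled on the A2A task lifecycle, so treat them as placeholders.

```python
import itertools

_request_ids = itertools.count(1)


def jsonrpc_request(method, params):
    """Build a JSON-RPC 2.0 request object with a fresh numeric id."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": method,
        "params": params,
    }


# Assumed task states and allowed transitions (illustrative only).
TRANSITIONS = {
    "submitted": {"working", "failed", "canceled"},
    "working": {"working", "input-required", "completed", "failed", "canceled"},
    "input-required": {"working", "canceled"},
}
TERMINAL_STATES = {"completed", "failed", "canceled"}


class TaskTracker:
    """Tracks one long-running task as status updates arrive."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.state = "submitted"
        self.history = ["submitted"]

    def apply(self, new_state):
        """Record a status update, rejecting transitions that make no sense."""
        if new_state not in TRANSITIONS.get(self.state, set()):
            raise ValueError(f"illegal transition {self.state} -> {new_state}")
        self.state = new_state
        self.history.append(new_state)

    @property
    def done(self):
        return self.state in TERMINAL_STATES


if __name__ == "__main__":
    # Placeholder method name and params; not taken from the specification.
    request = jsonrpc_request("tasks/send", {"id": "task-1", "text": "Analyze orders"})
    print(request)

    tracker = TaskTracker("task-1")
    for update in ["working", "input-required", "working", "completed"]:
        tracker.apply(update)
    print(tracker.history, tracker.done)
```

Keeping the allowed transitions in one table makes it easy to spot an agent that reports, say, more work on a task it already marked as completed.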

A2A and API Testing

To make sure an A2A implementation works as expected, we need effective API testing. Testing confirms that AI agents can collaborate as planned, without communication breakdowns. Key areas of API testing for A2A include:

- Functional Testing: Verify that the API correctly supports the core A2A operations: task management, capability discovery, and message passing.

- Security Testing: Ensure that data exchanged via the API is encrypted and protected from potential breaches or tampering. Security is critical in enterprise environments, and API testing will confirm this.

- Load and Performance Testing: A2A is designed to handle long-running tasks, so it’s important to test how the system performs under high loads and over extended periods of time.

- Compatibility Testing: Since A2A is an open standard, the API must work seamlessly with multiple platforms and technologies. Compatibility testing ensures that AI agents can connect to each other regardless of the tech stack they use.
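
A functional or compatibility test often starts with simple structural checks. The sketch below shows two such checks under stated assumptions: the list of required Agent Card fields is a guess at a sensible minimum rather than the official schema, while the response checks follow the JSON-RPC 2.0 rules (matching `id`, and exactly one of `result` or `error`). Both helper names are hypothetical.

```python
# Assumed minimum set of Agent Card fields; the real schema may require more.
REQUIRED_CARD_FIELDS = ("name", "url", "skills")


def card_problems(card):
    """Return a list of human-readable problems found in an Agent Card."""
    problems = [
        f"missing field: {field}"
        for field in REQUIRED_CARD_FIELDS
        if field not in card
    ]
    if "url" in card and not str(card["url"]).startswith("https://"):
        problems.append("url should use https")
    if "skills" in card and not card["skills"]:
        problems.append("no skills advertised")
    return problems


def response_problems(request, response):
    """Check a JSON-RPC 2.0 response against the request that produced it."""
    problems = []
    if response.get("jsonrpc") != "2.0":
        problems.append("jsonrpc must be '2.0'")
    if response.get("id") != request.get("id"):
        problems.append("response id does not match request id")
    # A valid response carries either a result or an error, never both or neither.
    if ("result" in response) == ("error" in response):
        problems.append("exactly one of result or error is required")
    return problems


if __name__ == "__main__":
    print(card_problems({"name": "Demo Agent", "url": "http://insecure.example.com", "skills": []}))
    print(response_problems({"id": 1}, {"jsonrpc": "2.0", "id": 1, "result": {}}))
```

Returning a list of problems instead of raising on the first one lets a test report everything that is wrong with an agent in a single run.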

A2A vs. Model Context Protocol (MCP)

Now, let’s talk about Model Context Protocol (MCP), another important protocol in the AI ecosystem. While A2A focuses on enabling seamless collaboration between AI agents, MCP, an open protocol introduced by Anthropic, standardizes how an AI application connects a model to external tools and data sources, which gives an individual model better context for its task. Let’s explore the differences:

When to Use A2A:

- Collaborating Across Agents: Use A2A when multiple AI agents need to work together on a task. This includes scenarios where different agents have specialized capabilities (e.g., a customer service bot, a recommendation system, and a logistics assistant).

- Cross-System Communication: If your organization has agents operating on different platforms or tech stacks, A2A helps them communicate and collaborate.

- Task Management: If you need AI agents to manage complex workflows, A2A can coordinate the tasks and track progress.

When to Use MCP:

- Enhancing Model Understanding: MCP is ideal when an AI model needs more detailed, accurate, and contextual information to make better decisions or perform complex reasoning.

- Contextual Assistance: Use MCP when an AI model requires specific context about the task it’s performing, such as understanding the historical background, previous interactions, or the goals of the task.

- Single-Agent Context: Unlike A2A, which involves collaboration, MCP is primarily used to enhance a single agent’s understanding of the environment in which it operates. It provides the agent with better context, improving its decision-making ability.
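
One concrete way MCP supplies context is by letting a model call a tool exposed by a server. The sketch below imitates that exchange in-process, with no network involved. The `tools/call` method and the `content` result shape follow the MCP specification as I understand it, but the tool name `get_order_history`, the order data, and the `handle` function are hypothetical stand-ins rather than any real server.

```python
import json

# Hypothetical data a model might need as context for a support request.
ORDERS = {"cust-42": ["running shoes", "water bottle"]}


def get_order_history(arguments):
    """Hypothetical tool: look up past purchases for a customer."""
    return ORDERS.get(arguments["customer_id"], [])


TOOLS = {"get_order_history": get_order_history}


def tools_call_request(request_id, name, arguments):
    """Build a JSON-RPC 2.0 request asking the server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def handle(request):
    """Toy stand-in for a server: dispatch a tools/call request to a local function."""
    base = {"jsonrpc": "2.0", "id": request.get("id")}
    if request.get("method") != "tools/call":
        return {**base, "error": {"code": -32601, "message": "Method not found"}}
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return {**base, "error": {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}}
    payload = tool(params.get("arguments", {}))
    return {
        **base,
        "result": {"content": [{"type": "text", "text": json.dumps(payload)}], "isError": False},
    }


if __name__ == "__main__":
    response = handle(tools_call_request(1, "get_order_history", {"customer_id": "cust-42"}))
    print(json.dumps(response, indent=2))
```

The model never talks to other agents here; it simply receives extra context (the order history) that it can use in its own reasoning, which is the single-agent focus described above.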

Key Differences:

| Feature | A2A Protocol | MCP |
|---|---|---|
| Primary Focus | Collaboration between multiple agents | Contextual understanding for individual agents |
| Usage | Multiple agents coordinating on tasks | Enhances an agent’s task execution through context |
| Collaboration | Yes, allows agents to work together | No, focuses on enhancing individual agent performance |
| Context Awareness | Not as deep; focuses on task coordination | Deep, rich context for decision-making |
| Task Management | Yes, supports task delegation and management | No, focuses on contextual awareness for a single agent |
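
The comparison can be boiled down to a tiny rule of thumb. The helper below is a hypothetical sketch, not an official decision procedure: it returns a set because the two protocols are complementary and a system may well need both.

```python
def protocols_for(agent_count, needs_external_context):
    """Suggest which protocols fit, based on the two questions the table answers.

    agent_count: how many independent agents must coordinate on the task.
    needs_external_context: whether a model needs extra tools or data to do its job.
    """
    chosen = set()
    if agent_count > 1:
        chosen.add("A2A")  # multi-agent coordination and task delegation
    if needs_external_context:
        chosen.add("MCP")  # richer context for an individual model
    return chosen


if __name__ == "__main__":
    # A support bot that delegates to a recommender and also looks up order data.
    print(sorted(protocols_for(agent_count=2, needs_external_context=True)))
```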

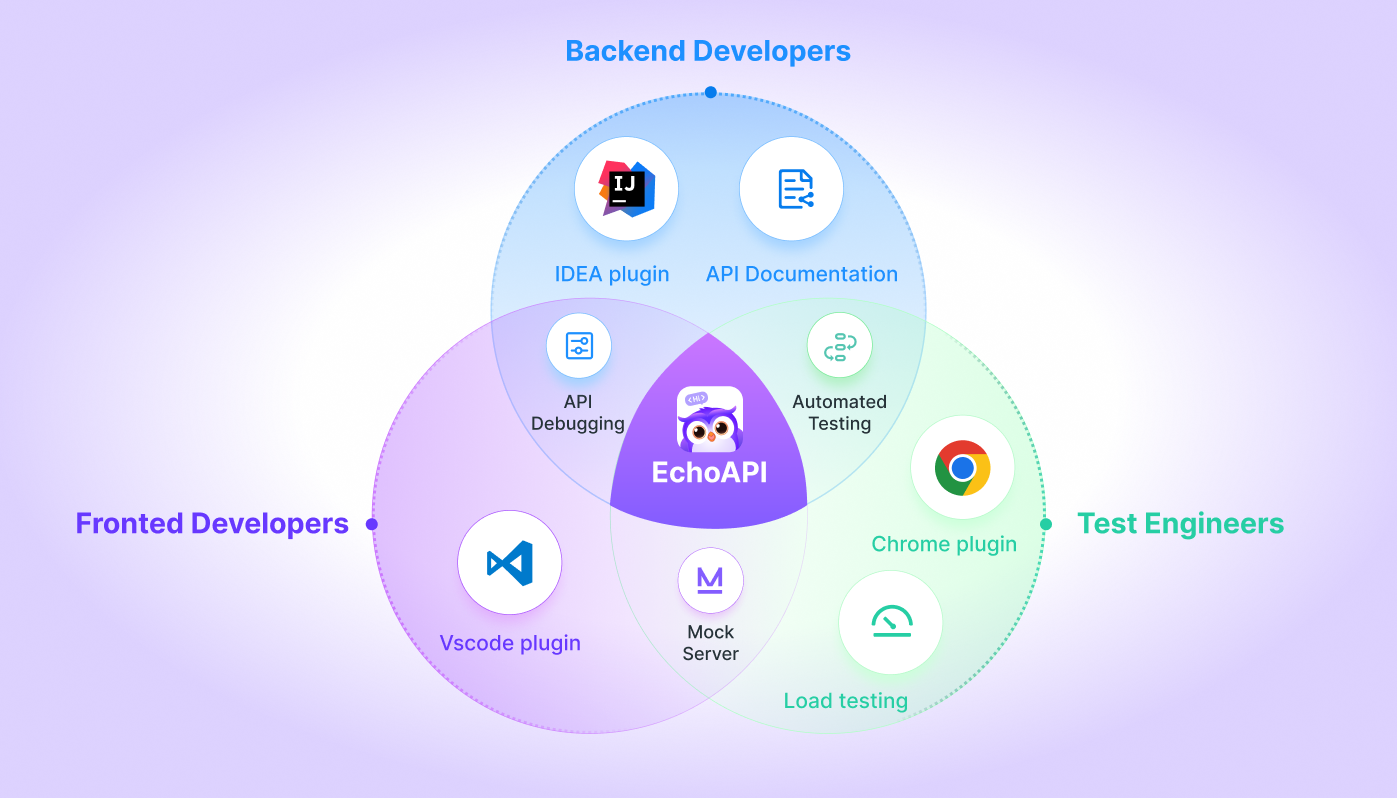

Why EchoAPI is Crucial for MCP and A2A Integration

When working with AI protocols like Model Context Protocol (MCP) and Agent2Agent (A2A), robust and efficient API management becomes critical. EchoAPI is a comprehensive API tool that complements both MCP and A2A implementations by streamlining design, testing, and collaboration across complex AI ecosystems. Here’s why EchoAPI is useful for these protocols:

1. Unified API Platform for Seamless Integration

A2A and MCP both rely heavily on API interactions—whether it's agents collaborating in real-time or a single model enhancing its contextual understanding. EchoAPI’s all-in-one platform enables you to design, test, and debug APIs across various communication protocols like HTTP, WebSocket, and GraphQL. This ensures smooth and efficient integration between different AI agents and models, which is crucial for both A2A’s multi-agent collaboration and MCP’s deep contextual processing.

2. Smart Authentication and Cross-Tool Compatibility

With AI-driven systems often requiring secure, scalable authentication mechanisms like OAuth 2.0, JWT, and AWS Signature, EchoAPI supports advanced security protocols that make sure agents and models can communicate securely in A2A or work within a protected environment in MCP. Plus, EchoAPI’s ability to import/export projects from popular tools like Postman, Swagger, and Insomnia allows seamless collaboration between teams working with different platforms, ensuring that both A2A and MCP integrations are streamlined and hassle-free.

3. AI-Powered Imports for Effortless Integration

EchoAPI’s intelligent document recognition tools allow you to automatically convert API documentation into actionable interfaces, which saves valuable time. This is especially useful in A2A where multiple agents from different systems need to work together, and in MCP, where precise context mapping is essential for model optimization. EchoAPI makes it easy to quickly set up and adapt your API definitions to suit evolving AI workflows.

4. Offline Support for Continuous Development

Whether you’re working on an A2A collaboration project with multiple agents or developing an MCP-enhanced AI model, EchoAPI’s offline support allows you to continue working without an internet connection. Teams can stay productive wherever they are, so API development and testing keep moving.

5. Easy Team Collaboration

The real-time collaboration feature in EchoAPI is a game-changer for teams working on complex A2A or MCP projects. Instant synchronization of data, along with the ability to share progress easily, ensures that all team members, whether they’re testing agent interactions or refining model context, are always on the same page. This fosters efficient communication and speeds up development cycles, which is crucial when dealing with intricate AI integrations.

By combining all of these features, EchoAPI provides the perfect API toolkit for the A2A and MCP protocols, making it easier to build, test, and maintain collaborative AI systems that are scalable, secure, and efficient. Whether you're integrating multiple AI agents or enhancing a model's contextual intelligence, EchoAPI’s seamless and powerful API solutions ensure that your AI workflows are optimized and future-proofed.

Conclusion: A2A vs. MCP — When to Use Each

In summary, A2A and MCP are complementary but serve different purposes:

- A2A is your go-to protocol when you need AI agents to collaborate, share tasks, and work together seamlessly. It ensures agents from different systems and platforms can communicate with each other and coordinate tasks.

- MCP is designed to give individual AI models richer context, helping them make more informed decisions and handle complex tasks more effectively. It’s all about enhancing an agent’s understanding of its environment and goals.

As AI systems become increasingly complex, knowing when to use A2A and when to apply MCP will be crucial to building efficient, scalable, and intelligent AI-driven systems.
