Why Your API Will Fail Without These 2 Critical Shields: Master Rate Limiting & Error Handling Now

The success of an API is not achieved in a day. In this article, we will explain the importance of rate limiting and error handling, demonstrating how these implementations enhance the stability of APIs and improve user experience.

The Silent Killers of APIs

Imagine this scenario: You’ve spent weeks, maybe months, building a sleek API. Every single endpoint works flawlessly—you've tested it over and over again. Your responses are pristine JSON objects, your authentication feels as solid as Fort Knox, and your response times are so fast you'll swear you've defied the laws of physics. In short, you’re ready to launch.

But then—BAM—the reality of real-world usage smacks you in the face:

- In the middle of the night, your server collapses under the weight of a single client sending 10,000 requests per minute, because, hey, why not?

- A small typo in a query parameter triggers a 500 Internal Server Error whose raw error output exposes sensitive database credentials to the entire world.

- Suddenly, your once-"reliable" API is the punchline of a meme spreading like wildfire on Reddit.

The truth is, no matter how perfect your API seems in controlled environments, it's not just about functionality. It’s about resilience. Building something that works is child’s play. Building something that lasts—something that survives unexpected spikes in traffic, misuse, or even nefarious attacks—is what separates the pros from the amateurs.

The internet isn’t a safe and serene space. It’s a wild jungle filled with bad actors, poorly behaved clients, bots, and the occasional avalanche of legitimate user traffic. Your API doesn’t just need to work—it needs to wear bulletproof armor.

If you’re nodding already, here’s the good news: Together, we’re about to level up your API defenses.

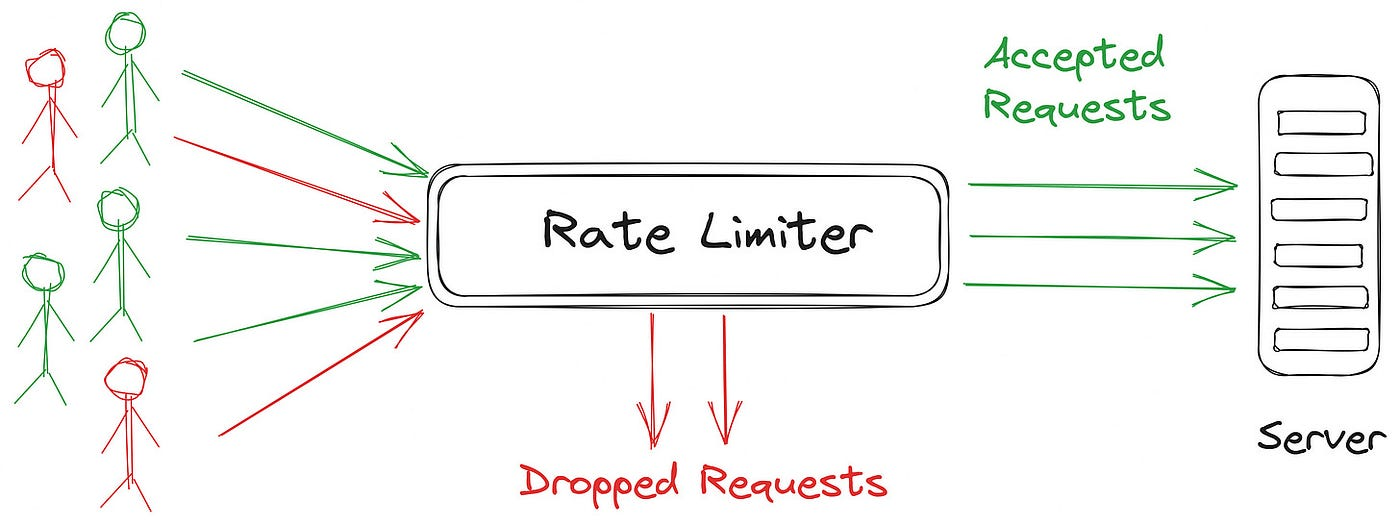

Part 1: Rate Limiting – Your API's First Line of Defense

Rate limiting isn’t just a feature—it’s your API’s front gate, security camera, and bouncer all rolled into one. Essentially, it ensures that no single client, malicious or otherwise, can overwhelm your service or ruin the party for others.

What Does Rate Limiting Solve?

Here’s what effective rate limiting brings to the table:

- Prevents server overloads: Protects your API from collapsing under heavy, sudden loads.

- Stops abusive users: Keeps bad actors and spamming bots in check.

- Ensures fairness: Allocates your resources evenly, so legitimate users always have access.

Without rate limiting, a single user—or even just a rogue script—can wreak havoc on your API. And once your server goes down, your real users aren’t going to wait around patiently—they’re going to move on to your competitor.

Step-by-Step Guide to Rate Limiting

Let’s explore a practical approach to implementing rate limiting, broken into bite-sized actions:

1. Start by Choosing the Right Strategy:

Different applications call for different rate limiting mechanisms. Here are the main options to consider:

- Token Bucket: Think of it as a bouncer handing out tickets at a nightclub. Each request spends one token, and tokens refill at a steady rate up to a fixed capacity, so short bursts get through while the long-run rate stays capped. This is the most common and flexible option.

- Fixed Window: It’s simple, a “5 requests per 60 seconds” kind of thing. While easy to implement, it can let through up to twice the limit when a burst straddles the boundary between two windows.

- Sliding Window Logs: If your API deals with high-value transactions or sensitive data, this is the precision scalpel of rate limiting. It records a timestamp for each request and counts only those inside a moving time window, which removes the boundary surge at the cost of more memory per client.
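To make the strategies above concrete, here is a minimal in-memory sketch of a token bucket and a sliding window log. It is an illustration only: the class names, the `allow()` method, and the injectable `clock` parameter are assumptions made for this example, and a production limiter would normally keep its state in a shared store rather than in process memory.

```python
import time
from collections import deque


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `refill_rate` tokens per second."""

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock
        self.tokens = float(capacity)  # start with a full bucket
        self.updated = clock()

    def allow(self):
        now = self.clock()
        # Add the tokens earned since the last call, capped at capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class SlidingWindowLog:
    """Allow at most `limit` requests in any trailing `window` seconds."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.timestamps = deque()

    def allow(self):
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        while self.timestamps and now - self.timestamps[0] >= self.window:
            self.timestamps.popleft()
        if len(self.timestamps) < self.limit:
            self.timestamps.append(now)
            return True
        return False
```

You would keep one limiter object per client key (for example, per IP address or API key) and call `allow()` before handling each request. The token bucket only stores two numbers per client, while the log stores one timestamp per accepted request, which is the memory trade-off to weigh.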

2. Write the Code (Python Flask Example):

Here’s how you might implement rate limiting in Python:

    from flask import Flask, jsonify
    from flask_limiter import Limiter
    from flask_limiter.util import get_remote_address

    app = Flask(__name__)

    limiter = Limiter(app=app, key_func=get_remote_address)

    @app.route("/api/payments", methods=["POST"])
    @limiter.limit("10/minute")  # Critical rate limit set here!
    def process_payment():
        # Payment logic goes here
        return jsonify({"status": "success"})

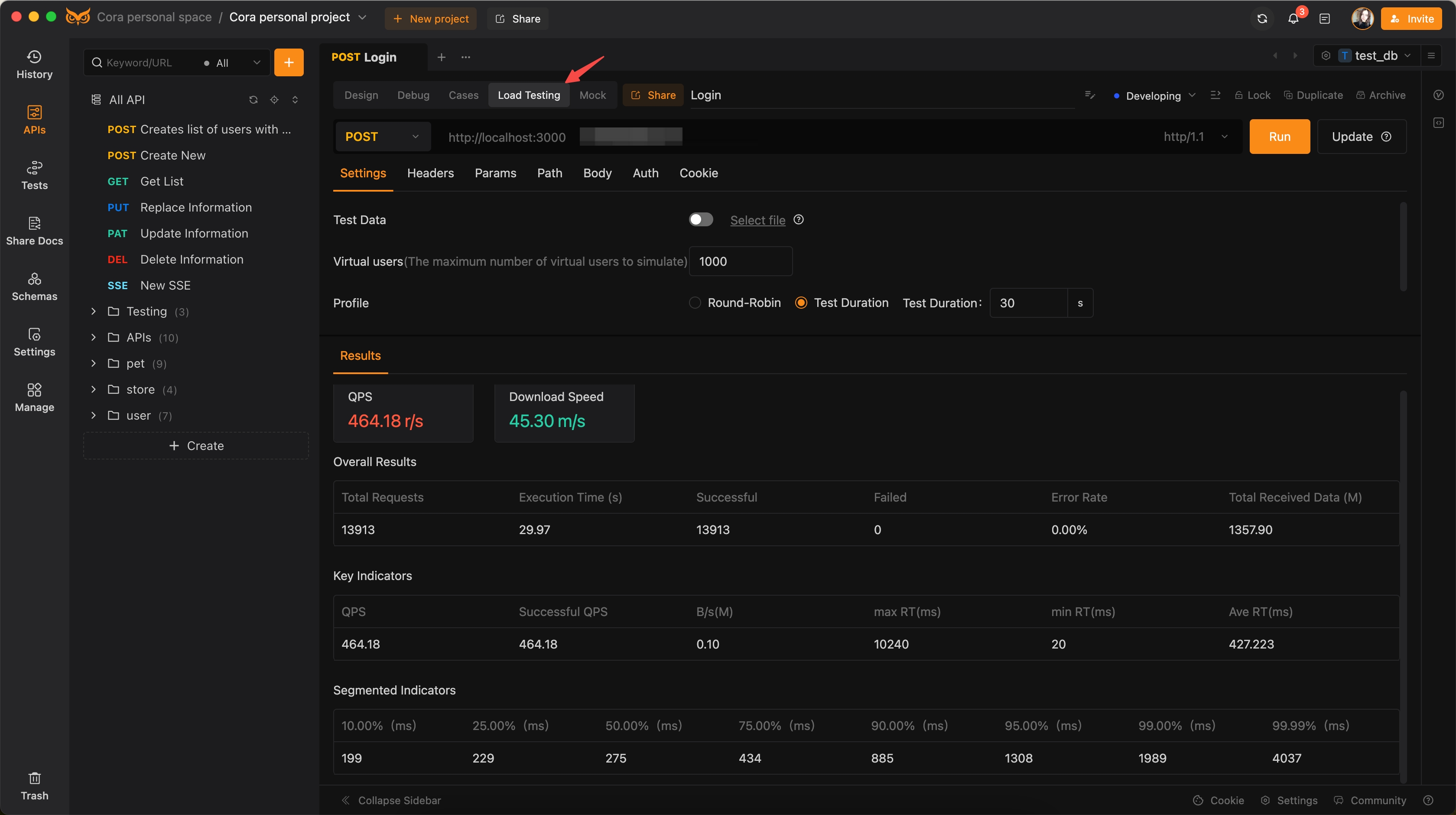

3. Test Your Setup Under Stress (with EchoAPI):

Testing doesn’t stop at "it works." You’ll need to run your API through its paces:

- Create a test project in EchoAPI.

- Set POST /api/payments as the endpoint to simulate a flood of requests.

- In Load Testing Mode, simulate 50 to 100 concurrent users hammering your endpoint.

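- Watch for rejected requests (429 Too Many Requests) once a client passes 10 requests per minute. The Flask example keys clients by remote address, so simulated users sent from the same machine share one limit.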
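If you also want a scripted version of this check, the sketch below fires a burst of concurrent calls and tallies the status codes that come back. Both `run_burst` and `make_fake_endpoint` are hypothetical helpers written for this example. The fake endpoint is only a stand-in so the script runs without a server; in a real test you would pass a function that sends an actual POST and returns the response status.

```python
import threading
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def run_burst(send_request, total_requests, concurrency):
    """Call `send_request()` `total_requests` times and tally the status codes."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        futures = [pool.submit(send_request) for _ in range(total_requests)]
        return Counter(future.result() for future in futures)


def make_fake_endpoint(limit):
    """Stand-in for a rate-limited endpoint: 200 for the first `limit` calls, then 429."""
    lock = threading.Lock()
    calls = 0

    def send():
        nonlocal calls
        with lock:  # keep the counter consistent across threads
            calls += 1
            return 200 if calls <= limit else 429

    return send
```

Calling `run_burst(make_fake_endpoint(limit=10), total_requests=50, concurrency=50)` returns a tally of accepted versus rejected calls, which is the number to compare against your configured limit.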

Common Pitfalls to Watch Out For

Building rate limiting is just half the battle. To completely secure your API, avoid these mistakes:

- Forgetting to separate anonymous users from authenticated ones: Authenticated users should likely get higher limits.

- One-size-fits-all limits: Free-tier users vs. premium users need vastly different allowances.

- Leaving the client clueless about retry timing: Use the Retry-After header to guide users on when to try again.
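Here is one way the three pitfalls above might be handled together, as a framework-agnostic sketch. The tier names, the numbers in `LIMITS_PER_MINUTE`, the shape of the `user` dict, and the `RATE_LIMITED` error code are all assumptions for illustration, not recommendations.

```python
import math

# Hypothetical tiers and limits (requests per minute); tune these for your API.
LIMITS_PER_MINUTE = {"anonymous": 10, "free": 60, "premium": 600}


def limit_for(user):
    """Pick a per-minute limit from the caller's tier; `user` is None when unauthenticated."""
    if user is None:
        return LIMITS_PER_MINUTE["anonymous"]
    # Unknown or missing tiers fall back to the free-tier limit.
    return LIMITS_PER_MINUTE.get(user.get("tier"), LIMITS_PER_MINUTE["free"])


def too_many_requests(seconds_until_reset):
    """Build a 429 response as (status, headers, body) with a Retry-After hint."""
    # Retry-After takes whole seconds, so round up and never send 0.
    retry_after = max(1, math.ceil(seconds_until_reset))
    headers = {"Retry-After": str(retry_after)}
    body = {
        "code": "RATE_LIMITED",
        "message": f"Too many requests. Retry in {retry_after} seconds.",
    }
    return 429, headers, body
```

Rounding up means a well-behaved client that waits exactly the advertised time should not be rejected a second time.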

Part 2: Error Handling – Reducing Chaos, One Error at a Time

Every API error is an opportunity—an opportunity to make debugging easier, improve user experience, and protect your system’s integrity. When errors are cryptic, your users become frustrated and your servers become harder to maintain. Smart error handling changes all that.

What Does Error Handling Solve?

If rate limiting stops harmful traffic, error handling makes sure that when something goes wrong—and trust us, it will go wrong—it’s clean, professional, and helpful. Specifically, it:

- Prevents leaked data: A poorly coded API might accidentally expose internal logs or sensitive information in error messages.

- Guides users during mistakes: Users need helpful and clear errors (“Please provide a valid email”), not a generic “Something went wrong.”

- Helps you debug faster: Detailed but securely logged errors can be your greatest ally in troubleshooting.

The 4 Golden Error Types You Need

To build robust APIs, you’ll need to master these essential error responses:

- 400 Bad Request: For issues like malformed JSON, missing parameters, or invalid data.

- 401 Unauthorized: When the client didn’t provide authentication credentials—or got them wrong.

- 429 Too Many Requests: Thanks to your shiny new rate limiter, this tells users when to slow down.

- 500 Internal Server Error: A catch-all for server-side issues (but handle it delicately, as you’ll see).
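One way to keep these four responses consistent is to route every failure through a single function that produces the same envelope each time. The sketch below assumes a small hypothetical exception hierarchy (`ApiError` and its subclasses) and a `to_response` helper; the names and error codes are made up for this example.

```python
class ApiError(Exception):
    """Base class for errors whose message is safe to show to the client."""

    status = 500
    code = "INTERNAL_ERR"

    def __init__(self, message):
        super().__init__(message)
        self.message = message


class BadRequest(ApiError):
    status, code = 400, "BAD_REQUEST"


class Unauthorized(ApiError):
    status, code = 401, "UNAUTHORIZED"


class TooManyRequests(ApiError):
    status, code = 429, "RATE_LIMITED"


def to_response(error):
    """Turn any exception into (status, body) using one consistent envelope."""
    if isinstance(error, ApiError):
        return error.status, {"code": error.code, "message": error.message}
    # Anything unexpected becomes a generic 500; details belong in server logs.
    return 500, {
        "code": "INTERNAL_ERR",
        "message": "Something went wrong. Please try again later.",
    }
```

Handlers can then raise `BadRequest("Please provide a valid email")` and rely on one place to decide what the client sees, while an unexpected exception never carries its own message into the response.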

Build an Error Safety Net (Node.js Example)

Here’s an example of robust error handling in Node.js:

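    app.post('/api/upload', async (req, res) => {
      try {
        // Main logic goes here
      } catch (error) {
        // Known client mistake: return a specific, documented 400
        // (InvalidFileTypeError is assumed to be a custom error class defined elsewhere)
        if (error instanceof InvalidFileTypeError) {
          return res.status(400).json({
            code: "INVALID_FILE",
            message: "Only .png or .jpeg files are allowed",
            docs: "https://api.yoursite.com/errors#INVALID_FILE"
          });
        }
        // Unexpected failure: log the stack server-side, return a generic 500
        // (req.id is assumed to come from request-ID middleware; Express does not set it by default)
        console.error(`[REQ_ID:${req.id}]`, error.stack);
        res.status(500).json({
          code: "INTERNAL_ERR",
          message: "Something went wrong. Please try again later.",
          request_id: req.id
        });
      }
    });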

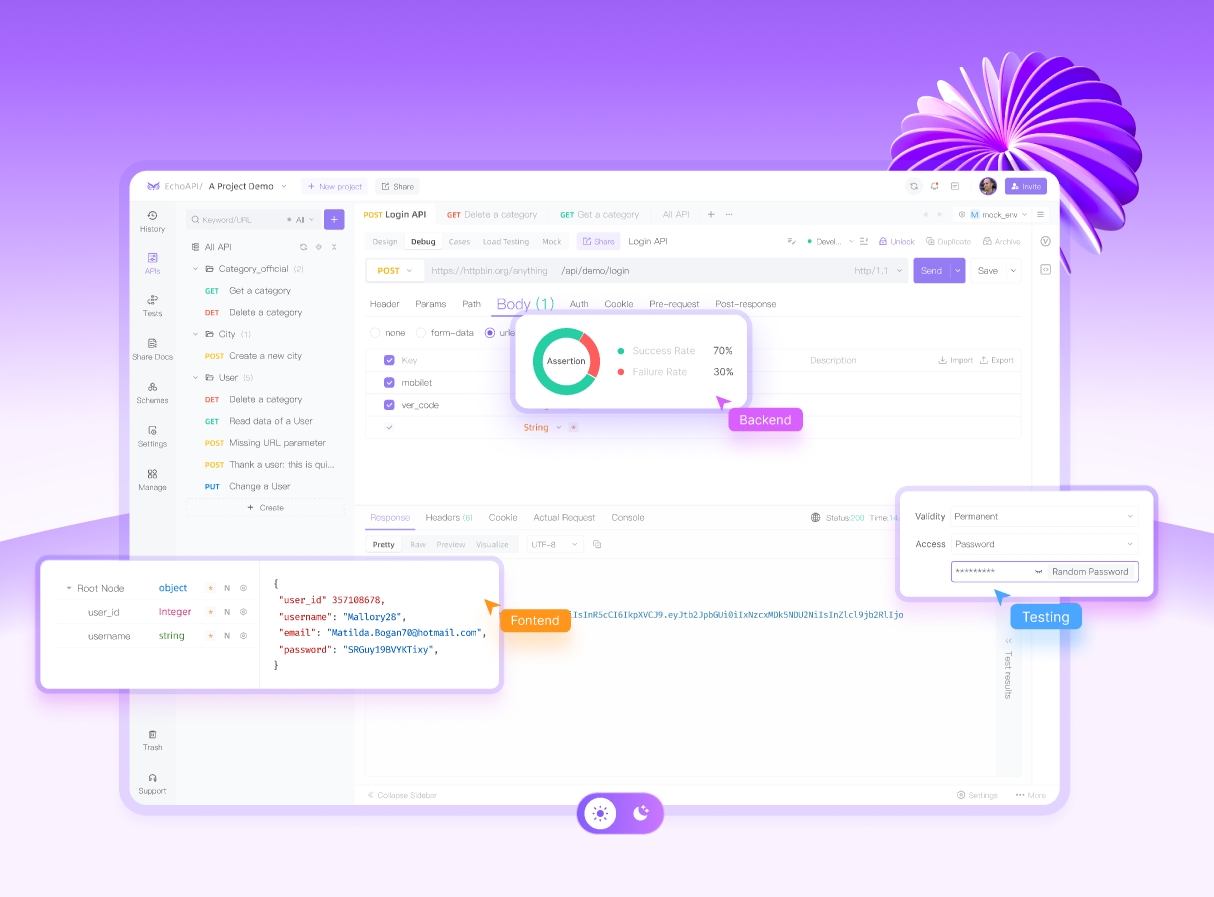

Pro Tips for Testing Errors Effectively

- Use tools like EchoAPI to simulate edge cases like invalid data, missing tokens, or malformed JSON.

- Ensure users see meaningful errors while keeping internal logs private.

- Ensure every error response has documentation coverage—you’d be amazed at how much good docs improve the user experience.
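The second tip can be checked automatically. The sketch below wraps a handler so unexpected errors are logged privately and answered with a sanitized 500, then scans the response body for markers that should never reach a client. `safe_call`, `leaks_internals`, the dict-shaped `request`, and the list of forbidden markers are all assumptions for this example; adapt the markers to whatever your stack tends to leak.

```python
import logging
import uuid

logger = logging.getLogger("api")


def safe_call(handler, request):
    """Run `handler(request)`; on an unexpected error, log details and return a sanitized 500."""
    request_id = request.get("id") or uuid.uuid4().hex
    try:
        return 200, handler(request)
    except Exception:
        # The full traceback goes to the server log only, keyed by request ID.
        logger.exception("[REQ_ID:%s] unhandled error", request_id)
        return 500, {
            "code": "INTERNAL_ERR",
            "message": "Something went wrong. Please try again later.",
            "request_id": request_id,
        }


def leaks_internals(body, forbidden=("Traceback", "password", "SELECT ")):
    """Return True if any forbidden marker appears in the response body."""
    text = str(body)
    return any(marker in text for marker in forbidden)
```

In a test suite, you might call `safe_call` with a handler that deliberately raises an exception containing a fake secret, then assert that `leaks_internals` returns False for the response body. The request ID in the response is what lets support staff find the matching log entry.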

Wrapping Things Up

Building a great API isn’t just some checkbox that says “it works.” It’s about making it resilient, secure, and user-friendly during the worst-case scenarios.

Here’s a recap:

✅ Rate Limiting: The gatekeeper of your API’s stability.

✅ Error Handling: Turning mistakes into diagnostics.

✅ EchoAPI: A stress-testing and logging tool that’s always on your side.

Remember, your API isn’t judged by the best days—it’s measured by how gracefully it handles the worst. So get out there, implement these shields, and take your API from functional to bulletproof. Your future self (and your users) will thank you.
